Introduction

OpenAI’s Superalignment team is working on one of the harder problems in artificial intelligence: how to guide AI systems that outsmart the humans supervising them. Let’s take a closer look at the team’s mission, the hurdles it faces, and the methods it is using to try to make sure superintelligent AI develops safely.

Navigating Uncharted Territory

The Quest for Alignment

In July 2023, OpenAI established the Superalignment team with a singular goal: to develop strategies for steering, regulating, and governing AI systems with superhuman intelligence. Collin Burns, Pavel Izmailov, and Leopold Aschenbrenner, key members of the team, recently shared insights into their work at the NeurIPS conference in New Orleans.

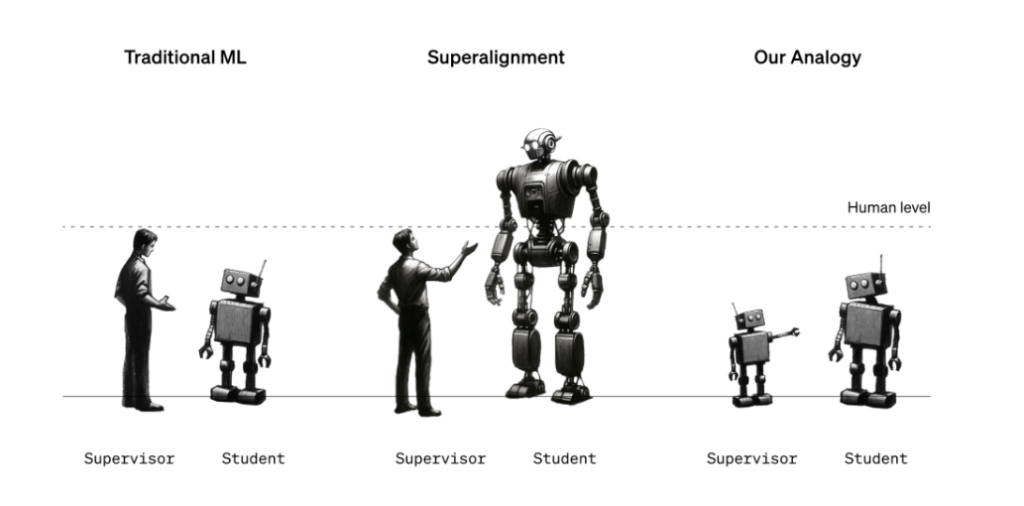

The central challenge, as Burns notes, lies in aligning models that surpass human intelligence, a feat considerably more intricate than aligning those of equal or lesser cognitive capacity. The team acknowledges the enormity of this task but remains resolute in its commitment to finding viable solutions.

Leadership Dynamics

Sutskever’s Stewardship

Guiding this ambitious effort is OpenAI co-founder and chief scientist Ilya Sutskever. His leadership of the Superalignment team raises eyebrows, especially in the context of the recent upheavals within OpenAI. Despite internal shifts, OpenAI’s PR asserts that Sutskever is steadfastly leading the charge to address the profound challenges posed by superintelligent AI.

Debunking Misconceptions

Navigating Skepticism

The Superalignment initiative has stirred debate within the AI research community. Some argue that it is premature, while others call it a distraction. Sam Altman has drawn parallels with the Manhattan Project, but critics suggest that claims of imminent superintelligence divert attention from pressing regulatory concerns such as algorithmic bias and AI toxicity.

Sutskever, however, remains convinced that AI, in some form, could pose an existential threat. He has gone as far as burning a wooden effigy to symbolize his commitment to preventing AI harm. Moreover, OpenAI has dedicated 20% of its computing resources to the Superalignment team’s research, which underlines how seriously the company takes the mission.

Cracking the Alignment Code

Strategies in Motion

The Superalignment team grapples with the challenge of defining and detecting “superintelligence.” Its current approach uses a less sophisticated AI model (e.g., GPT-2) to guide a more advanced one (GPT-4) towards desired outcomes. The weaker model stands in for human supervisors and the stronger model for a superintelligent system, so the setup tests whether limited supervision can still steer a more capable model. The approach acknowledges the complexity of superintelligent systems and the need for effective governance and control frameworks.
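
To make the weak-guides-strong idea concrete, here is a minimal toy sketch in Python. It is not OpenAI’s setup and involves no GPT models. It assumes a one-dimensional task, a weak supervisor whose labels are wrong at random 25% of the time, and a "strong" student that fits a simple cut-off to whatever labels it is given. All names (`weak_label`, `fit_threshold`, `run_experiment`) are hypothetical and chosen for illustration.

```python
import random


def true_label(x):
    # Ground truth for the toy task: positive inputs belong to class 1.
    return 1 if x > 0 else 0


def weak_label(x, rng, error_rate=0.25):
    # Weak supervisor (assumption): correct most of the time, wrong at random.
    y = true_label(x)
    return 1 - y if rng.random() < error_rate else y


def fit_threshold(xs, ys):
    # "Strong" student: choose the cut-off that agrees best with the labels given.
    candidates = [i / 20 for i in range(-20, 21)]

    def agreement(t):
        return sum((1 if x > t else 0) == y for x, y in zip(xs, ys))

    return max(candidates, key=agreement)


def accuracy(predictions, xs):
    # Fraction of predictions that match the ground truth.
    return sum(p == true_label(x) for p, x in zip(predictions, xs)) / len(xs)


def run_experiment(seed=0, n_train=2000, n_test=1000):
    rng = random.Random(seed)
    train_x = [rng.uniform(-1, 1) for _ in range(n_train)]
    test_x = [rng.uniform(-1, 1) for _ in range(n_test)]

    # The student only ever sees the weak supervisor's noisy labels.
    weak_train_y = [weak_label(x, rng) for x in train_x]
    student_t = fit_threshold(train_x, weak_train_y)

    # Reference point: the same student trained on ground-truth labels.
    ceiling_t = fit_threshold(train_x, [true_label(x) for x in train_x])

    return {
        "weak_supervisor": accuracy([weak_label(x, rng) for x in test_x], test_x),
        "student_on_weak_labels": accuracy(
            [1 if x > student_t else 0 for x in test_x], test_x
        ),
        "student_on_true_labels": accuracy(
            [1 if x > ceiling_t else 0 for x in test_x], test_x
        ),
    }


if __name__ == "__main__":
    for name, acc in run_experiment().items():
        print(f"{name}: {acc:.3f}")
```

In this toy, the student trained on noisy labels can end up more accurate than its supervisor because the supervisor’s mistakes are random and average out. That assumption may not hold for real models, where a strong model could also learn to imitate its supervisor’s systematic errors.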

Peer Collaboration

OpenAI’s Call to Action

Recognizing the multifaceted nature of its mission, OpenAI is reaching out to the global community. It is launching a $10 million grant program to fund technical research on superintelligence alignment. The initiative aims to involve academic labs, nonprofits, individual researchers, and graduate students in collaborative efforts to address this challenge.

Unpacking External Support

Schmidt’s Contribution

Former Google CEO Eric Schmidt’s contribution to OpenAI’s $10 million grant program adds a layer of intrigue. Schmidt is a supporter of Altman and a vocal advocate for AI research, and his involvement raises questions about potential commercial motivations. OpenAI, however, says that its research findings, including code, will be shared publicly.

Conclusion

In navigating the uncharted territory of superintelligent AI, OpenAI’s Superalignment team is grappling with challenges that could define the future of artificial intelligence. Its stated commitment to transparency, collaboration, and responsible AI development underscores the significance of the mission. As the team continues its work on alignment, OpenAI is inviting the global community to help shape an AI future that benefits humanity.